Forty million times a second, bunches of protons cross each other at nearly the speed of light inside the four detectors along the 27-kilometer ring of the LHC, CERN's giant particle accelerator near Geneva. At each bunch crossing, by virtue of the equivalence between energy and mass, collisions between particles generate myriad other particles whose properties provide information about the laws of our Universe.

During run 3, the data-taking campaign that will begin on July 5, 2022, the number of particles contained in each bunch will be doubled. The number of proton collisions per second will rise accordingly, which will significantly increase the chances of revealing the contours of a new continent of the infinitely small amidst intense experimental noise.

These improvements in the LHC's performance, unprecedented since its commissioning in 2010, will leave scientists facing a deluge of data. This prospect has led them to fundamentally rethink how they conduct their analyses, from selecting the relevant information at the detectors to organizing its long-term archiving on the LHC's global network of computing sites.

Of the four main experiments running at the accelerator, LHCb and ALICE have gone furthest in revising their selection and recording systems. CMS and ATLAS have made significant adaptations, in anticipation of their planned "major refurbishment" after the current campaign.

Each experiment sorts and organizes its collision data according to its own scientific objectives. ATLAS and CMS, for example, the two general-purpose detectors that discovered the Higgs boson in 2012, are looking for rare events. Consequently, the output of most of the hundreds of millions of collisions that occur every second in their crucible must be discarded, keeping only the events that may carry "interesting" physics. To do this, at each collision, a near-instantaneous analysis of a small subset of the data determines whether an event is relevant, in which case all the information concerning it is recorded. If not, the event is dropped.

In the case of ATLAS, for example, before run 3, only the information from its liquid argon calorimeter and its muon detector was systematically read out. And even then, the data from the calorimeter's 180,000 channels were combined so that only 5,000 independent analog signals were used to select events. "From this very fragmented information, including a superposition of different energy deposits where the products of several interactions were mixed, our vision of the events at this stage of the analysis remained relatively blurred, making it difficult, for example, to distinguish between hadrons and electrons," explains Georges Aad, of the Centre de Physique des Particules de Marseille (CPPM) and a member of ATLAS.

With run 3, the number of quasi-simultaneous proton collisions occurring at each bunch crossing, every 25 nanoseconds, will increase from 40 to 80. The calorimeter's readout time, however, is much longer than the interval between bunch crossings, and the detector's triggering capability, about once every 10 microseconds, will remain unchanged. The tracks left by about 2,400 interactions will therefore be superimposed in the detector electronics. There is only one solution: refine the selection.

To do this, new boards installed in the calorimeter electronics will make it possible to read up to 34,000 independent channels at the maximum rate. Better still, the resulting information will be digitized immediately, before being analyzed by more "robust" algorithms implemented on field-programmable gate arrays (FPGAs). "In this way, we will be able to estimate more precisely the energy of each particle and better disentangle the different collisions," says the physicist. This will make it possible to optimize the selection of the events to be kept for a complete analysis later on.

LHCb increases the pace

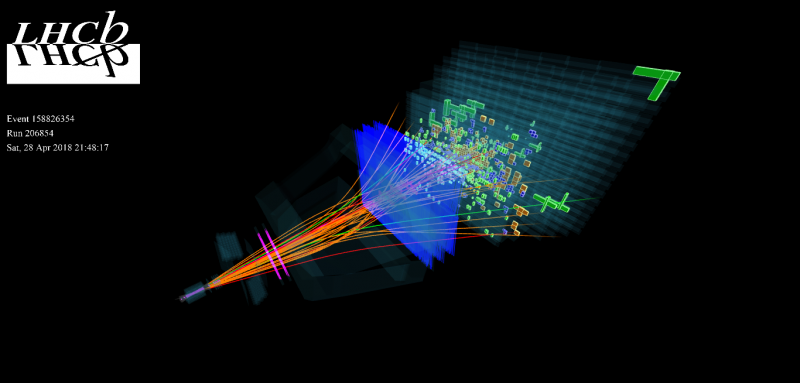

Just like ATLAS and CMS, the LHCb experiment has to sort drastically among the collisions occurring in its giant detector. It does, however, differ from the other experiments in one respect: the production rate of "bottom" hadrons, the particles that LHCb studies, is colossal, which makes the flow of information to be processed a problem in itself. In addition, only a few particular decays are sought, which requires especially sophisticated sorting. For run 3, the collaboration has opted for a real revolution in the way it conducts its analyses, one that will carry this experiment, dedicated to the study of bottom-hadron decays, well beyond the campaign that is now starting.

Until now, as in ATLAS, the decision whether or not to record the information specific to a collision was taken on the basis of very simple signatures, for example a muon with a large momentum or a large energy deposit in the calorimeter. But this simplicity had a cost: the risk of "missing" some potentially interesting but more complex events, typically characterized by particles of modest energy.
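To make the idea of a "very simple signature" concrete, here is a toy sketch of such a decision in Python. It is not the logic used by LHCb or ATLAS: the `EventSummary` fields and both thresholds are hypothetical, chosen only to illustrate how a simple cut can reject an event made of low-energy particles.

```python
from dataclasses import dataclass

# Hypothetical thresholds, for illustration only (not real experiment settings).
MUON_MOMENTUM_THRESHOLD_GEV = 1.5
CALO_ENERGY_THRESHOLD_GEV = 3.5


@dataclass
class EventSummary:
    """Coarse quantities available at decision time (hypothetical fields)."""
    max_muon_momentum_gev: float
    max_calo_energy_gev: float


def simple_trigger(event: EventSummary) -> bool:
    """Keep the event if either simple signature is present."""
    return (
        event.max_muon_momentum_gev > MUON_MOMENTUM_THRESHOLD_GEV
        or event.max_calo_energy_gev > CALO_ENERGY_THRESHOLD_GEV
    )


# An event with an energetic muon passes the cut.
energetic = EventSummary(max_muon_momentum_gev=5.0, max_calo_energy_gev=0.5)
# An event made only of modest-energy particles fails it,
# even if its full decay pattern would have been interesting.
soft = EventSummary(max_muon_momentum_gev=1.0, max_calo_energy_gev=1.0)

print(simple_trigger(energetic), simple_trigger(soft))
```

The second event shows the cost described above: nothing in a cut on one or two coarse quantities can recognize a complex decay built from modest-energy particles.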

This method has now become obsolete: the number of collisions per crossing in LHCb will rise to an average of six, compared to one previously, so that each snapshot of the detector contains a combinatorial tangle of more than 200 tracks. To replace it, the specialists have developed a system that analyzes in real time all the information deposited in all the detectors that make up LHCb, allowing them to select events on the basis of a detailed analysis of each collision. As Renaud Le Gac, of the Centre de Physique des Particules de Marseille and a member of LHCb, summarizes, "Before, the complete information on collisions was only read out at a frequency of 1 MHz. From now on, this complete reading will be done at the frequency of collisions, i.e. 40 MHz, implying the processing of 40 terabits of data per second, which is a first and a complete paradigm shift!"

To achieve this, the flood of data will be carried out of the detector's cavern by 11,000 optical fibers connected to 163 servers. Thanks to an FPGA board developed by the CPPM, the information associated with a single collision will first be aggregated. The tracks will then be partially reconstructed and the particles identified, thanks to a heterogeneous software framework and algorithms designed by the CPPM and the Laboratoire de Physique Nucléaire et des Hautes Energies, in Paris. These run on graphics processing units (GPUs), and the system as a whole can process 5 terabytes per second.

As Dorothea vom Bruch, also at the Marseille laboratory, explains, "GPUs are processors initially developed for image processing. Efficient and relatively inexpensive, they are very well suited to the parallel combinatorial analysis of thousands of collisions." "We are among the first to use them for a physics analysis and to demonstrate that it works," her colleague Renaud Le Gac is pleased to say. At the end of this first layer of analysis, the rate of selected events will be reduced to 1 MHz, and these events will be written to disk at 100 gigabytes per second.

At the same time, the servers will retrieve the calibration data used for the rapid reconstruction phase, but also for the next phase dedicated to the reconstruction of all the selected events. "This second phase will be carried out on conventional CPUs, but on the basis of recast and parallelized algorithms," explains Dorothea vom Bruch. The data resulting from this processing will then be permanently stored on CERN's servers at a rate of 10 gigabytes of data per second, ready for long-term analysis.
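The figures quoted for LHCb can be cross-checked with a little arithmetic. The sketch below, in Python, uses only the rates given in the text and assumes decimal units (1 terabit = 10^12 bits). The per-event sizes it derives are averages implied by those rates, not official LHCb numbers.

```python
# Rates quoted in the article for LHCb in run 3.
BUNCH_CROSSING_RATE_HZ = 40e6      # full readout at the collision frequency
READOUT_BITS_PER_S = 40e12         # 40 terabits per second off the detector
FIRST_STAGE_OUTPUT_HZ = 1e6        # events kept after the GPU stage
DISK_WRITE_BYTES_PER_S = 100e9     # 100 gigabytes per second to disk
STORAGE_BYTES_PER_S = 10e9         # 10 gigabytes per second to permanent storage


def bytes_per_event(throughput_bytes_per_s: float, event_rate_hz: float) -> float:
    """Average event size implied by a throughput and an event rate."""
    return throughput_bytes_per_s / event_rate_hz


# 40 terabits per second is 5 terabytes per second, the GPU-stage capacity.
readout_bytes_per_s = READOUT_BITS_PER_S / 8

raw_event_size = bytes_per_event(readout_bytes_per_s, BUNCH_CROSSING_RATE_HZ)
buffered_event_size = bytes_per_event(DISK_WRITE_BYTES_PER_S, FIRST_STAGE_OUTPUT_HZ)
rate_reduction = BUNCH_CROSSING_RATE_HZ / FIRST_STAGE_OUTPUT_HZ
bandwidth_reduction = readout_bytes_per_s / STORAGE_BYTES_PER_S

print(f"readout: {readout_bytes_per_s / 1e12:.0f} TB/s")
print(f"average size per crossing at readout: {raw_event_size / 1e3:.0f} kB")
print(f"average size per event written to disk: {buffered_event_size / 1e3:.0f} kB")
print(f"event-rate reduction in the first stage: x{rate_reduction:.0f}")
print(f"bandwidth reduction, detector to storage: x{bandwidth_reduction:.0f}")
```

Under these assumptions, the 40 terabits per second read from the detector and the 5 terabytes per second handled by the GPU stage are the same quantity, and the full chain reduces the data flow by a factor of about 500 between the detector and permanent storage.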

A completely new infrastructure for ALICE

This completely new analysis infrastructure, known as triggerless, is also the one adopted by ALICE for run 3. Dedicated to the study of the properties of quark-gluon plasma (QGP), an extreme state of matter, this experiment collects the swarms of particles that escape from the QGP as it cools down before disappearing. ALICE is therefore interested in the collective properties of events and seeks to measure the products of every collision. In previous campaigns, however, its detector was not able to work at the maximum rate allowed by the accelerator. After an intense rejuvenation, the goal is to increase the data rate by a factor of 10, bringing it to about 50 kHz. As Stefano Panebianco, of the CEA and a member of ALICE, explains, "Our online analysis system will allow us to retrieve data at the rate of a few terabits per second, before analyzing them on a farm of 500 servers, each containing 10 GPU and CPU cores, which will allow us to detect exotic and/or rare particles."

Data transfers between sites of the international computing and storage grid for the LHC (WLCG)

Beyond these online analyses, however complex they may be, the long-term work on the data, which leads to scientific discoveries and publications, is carried out on the CERN grid, or more precisely the Worldwide LHC Computing Grid (WLCG). This incredible infrastructure, spread over 170 sites in 42 countries and three continents, offers LHC physicists nothing less than a million computing cores and two exabytes of storage. Better still, it gives perfectly transparent access to the data and to the software used to analyze them, without users having to worry about where they are physically located around the world.

The IN2P3 computing center in Lyon is one of the 13 main sites, in addition to CERN's, that make up the global grid, hosting about 10% of all LHC data. As Sabine Crépé-Renaudin, IN2P3's deputy scientific director for computing and data, explains, "Unlike the detectors and the accelerator, we never take a break. The grid must evolve continuously while continuing to operate."

The hundreds of specialists who maintain the grid, without which the LHC's data would remain silent, are constantly renewing and adapting the disk and tape storage media, CPUs, databases, analysis software, and the middleware for communication and inter-site transfers. And they do so in a context where the demand for resources is increasing exponentially: "Until now, the computing and storage capacities of the WLCG have increased by about 15% per year, and will grow even more in the coming years," explains the physicist. She adds: "For run 3, the teams at the Lyon Computing Center changed the tape storage systems, the information access protocols and the authentication protocols. We have also adapted our computation models to the evolution of technologies, and carried out data challenges to ensure that our infrastructures are correctly sized for data transfers between sites."

Beyond that, run 3 promises to be a tremendous opportunity to prepare for the advent of the high-luminosity LHC, the HL-LHC, in 2029, which will see the volume of data to be processed increase by a factor of 5 to 10. "For the moment, we don't know how to do it," admits Sabine Crépé-Renaudin. Throughout the current campaign, the R&D effort will therefore be deployed in all directions: combining FPGAs, CPUs, GPUs and high-performance computing with the ramp-up of artificial intelligence algorithms based on neural networks; optimizing data security; not to mention issues of energy efficiency.
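A back-of-the-envelope calculation gives a sense of the gap. The Python sketch below compounds the growth of about 15% per year quoted for the WLCG. It assumes that this rate stays constant, counts seven years between 2022 and 2029, and treats required capacity as proportional to data volume. All three are simplifications made here for illustration, not statements from the WLCG.

```python
import math

ANNUAL_GROWTH = 0.15       # about 15% per year, as quoted for the WLCG
YEARS_UNTIL_HL_LHC = 7     # assumption: from 2022 to 2029


def capacity_after(years: float, annual_growth: float = ANNUAL_GROWTH) -> float:
    """Capacity multiplier after compounding a constant annual growth."""
    return (1 + annual_growth) ** years


def years_to_reach(factor: float, annual_growth: float = ANNUAL_GROWTH) -> float:
    """Years of constant compound growth needed to multiply capacity by `factor`."""
    return math.log(factor) / math.log(1 + annual_growth)


print(f"capacity multiplier after {YEARS_UNTIL_HL_LHC} years: "
      f"x{capacity_after(YEARS_UNTIL_HL_LHC):.2f}")
print(f"years needed for a factor of 5:  {years_to_reach(5):.1f}")
print(f"years needed for a factor of 10: {years_to_reach(10):.1f}")
```

Under these assumptions, seven years of 15% growth multiplies capacity by well under 3, while a factor of 5 to 10 would take more than a decade at the same pace. That gap is consistent with the emphasis on R&D rather than on simply adding hardware.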

In the meantime, as a new data-taking campaign that could be decisive for physics begins, everyone, from grid specialists to experts in detector electronics and selection algorithms, has only one objective: "all this is to do the best physics possible, with the potential to make discoveries that could revolutionize physics," enthuses Sabine Crépé-Renaudin. The LHC has probably never been so ready.

Translated from an article written by Mathieu Grousson (les Chemineurs), published here